Introduction

Deep Learning, Machine Learning, A.I.

Deep Learning is a particular type of machine learning method, and is thus part of the broader field of artificial intelligence (using computers to reason). Deep learning is another name for artificial neural networks, which are inspired by the structure of the neurons in the cerebral cortex.

The recent quantum leap in machine learning has been driven almost entirely by deep learning successes. When you read or hear about AI or Machine Learning successes in recent years, it really means Deep Learning successes.

Machine Learning can be split into 4 main fields: Supervised Learning, Unsupervised Learning, Reinforcement Learning and Generative Models.

Figure 0.1: AI, ML and Deep Learning.

Supervised Learning

Supervised Learning is by far the most common application in ML (say, roughly 95% of the research papers). We assume that we have collected some data. Namely, we have collected \(p\) features from each of the \(n\) observations of our dataset (e.g. pictures, or user actions on a website). For each of these observation feature vectors \({\bf x}_i\), we also know the outcome \(y_i\) (e.g. \(y_i=0\) or \(1\) depending on whether the picture shows a dog or a cat). From this labelled dataset \(({\bf x}_i, y_i)\), we want to estimate the parameters \({\bf w}\) of a predictive model such that \(f({\bf x}_i, {\bf w}) \approx y_i\).

Figure 0.2: Example of Supervised Learning Task: Image Classification

The task of Supervised Learning is thus to find a model that can predict the outcome from input features.
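To make this concrete, here is a minimal sketch, using only the Python standard library, of one possible choice of model: a logistic-regression model \(f({\bf x}, {\bf w})\) whose parameters \({\bf w}\) are estimated by gradient descent on the cross-entropy loss. The toy dataset (\(n=6\) observations, \(p=2\) features), the learning rate and the number of epochs are made-up assumptions, and `predict` and `fit` are hypothetical helpers, not functions from any library.

```python
import math

def sigmoid(z):
    # numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict(x, w):
    # f(x, w): estimated probability that y = 1; w[0] is the bias term
    z = w[0] + sum(wj * xj for wj, xj in zip(w[1:], x))
    return sigmoid(z)

def fit(X, y, lr=0.5, epochs=2000):
    # estimate w by batch gradient descent on the mean cross-entropy loss
    n, p = len(X), len(X[0])
    w = [0.0] * (p + 1)
    for _ in range(epochs):
        grad = [0.0] * (p + 1)
        for xi, yi in zip(X, y):
            err = predict(xi, w) - yi  # derivative of the loss w.r.t. z
            grad[0] += err
            for j in range(p):
                grad[j + 1] += err * xi[j]
        w = [wj - lr * gj / n for wj, gj in zip(w, grad)]
    return w

# hypothetical labelled dataset: n = 6 observations, p = 2 features each
X = [[0.0, 0.2], [0.3, 0.1], [0.2, 0.4], [1.0, 0.9], [0.8, 1.1], [1.2, 0.8]]
y = [0, 0, 0, 1, 1, 1]
w = fit(X, y)
labels = [round(predict(x, w)) for x in X]  # predicted 0/1 labels
```

Once \({\bf w}\) is estimated, the same `predict` function can be applied to new, unlabelled observations; this is the whole point of learning from a labelled dataset.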

Unsupervised Learning

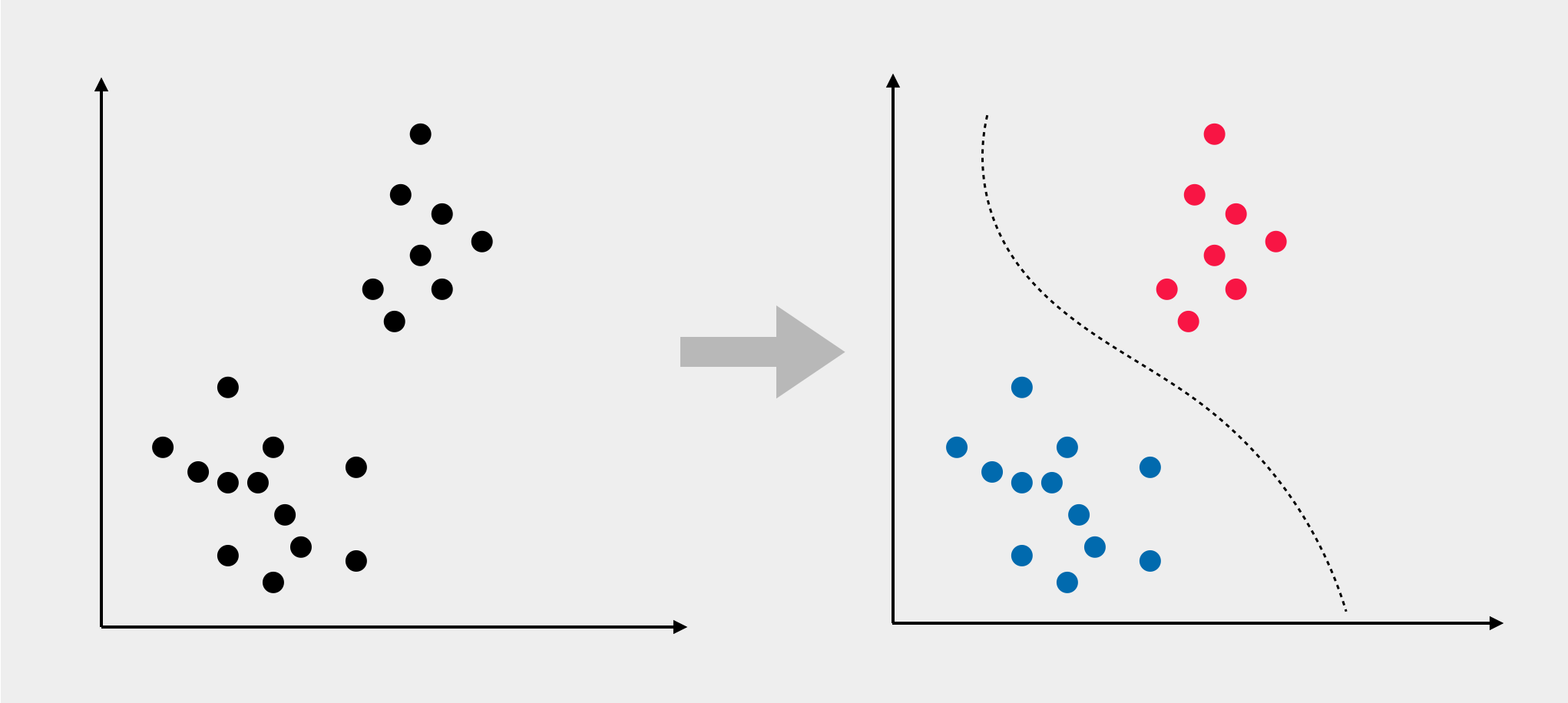

Here, the task is to learn about a dataset \(({\bf x}_i)\) by just looking at it, without any labelled information \(y_i\). For instance, a typical application of unsupervised learning is to cluster a dataset into smaller groups of observations that seem to share similar feature vectors. Hopefully, the clusters mean something useful that can be exploited afterwards.

Figure 0.3: Example of Unsupervised Learning Task: Clustering

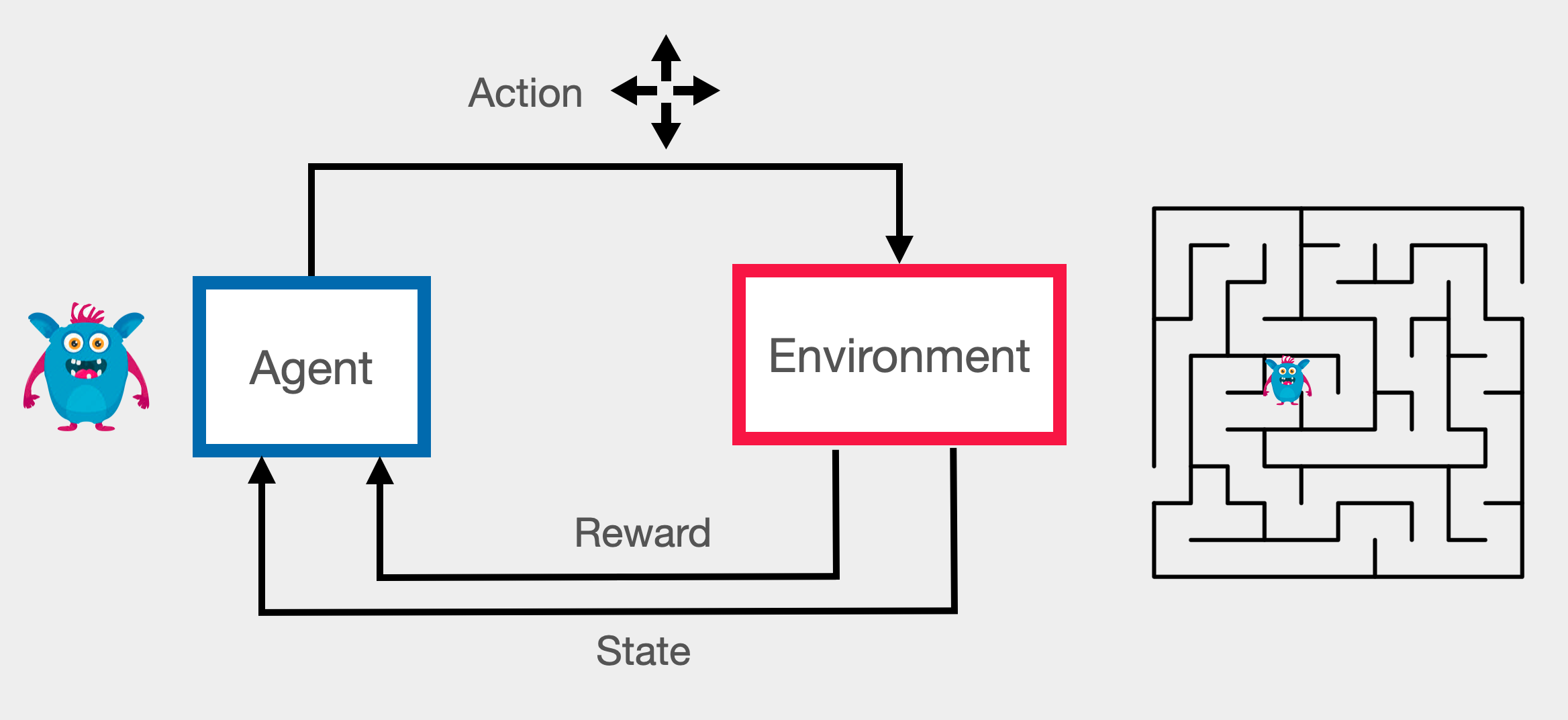
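As an illustration of clustering, the sketch below implements k-means, one classic clustering algorithm (chosen here only as an example). It alternates between assigning each observation \({\bf x}_i\) to its nearest centroid and moving each centroid to the mean of the observations assigned to it. The six 2-D points and the choice of \(k=2\) clusters are hypothetical; note that no labels \(y_i\) are used anywhere.

```python
import math
import random

def kmeans(points, k, iters=100, seed=0):
    # initialise the k centroids with k distinct observations picked at random
    rng = random.Random(seed)
    centroids = rng.sample(points, k)
    for _ in range(iters):
        # assignment step: each point joins the cluster of its nearest centroid
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c]))
                  for p in points]
        # update step: move each centroid to the mean of its members
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(v) / len(members) for v in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # empty cluster: keep old centroid
        if new_centroids == centroids:
            break  # converged: assignments will no longer change
        centroids = new_centroids
    return labels, centroids

# hypothetical unlabelled dataset: two visually obvious groups of points
points = [(0.0, 0.0), (0.5, 0.2), (0.1, 0.6), (5.0, 5.0), (5.3, 4.8), (4.9, 5.4)]
labels, centroids = kmeans(points, k=2)
```

The cluster indices themselves carry no meaning; it is up to us to interpret what each group represents.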

Reinforcement Learning

This task is about teaching an agent how to interact with its environment (the data) so as to maximise its reward. Reinforcement Learning (RL) is used in applications such as game playing, robotics, etc. Despite its potentially broad range of applications, Reinforcement Learning only makes up a tiny fraction of ML applications and research papers, largely because RL is particularly slow and tricky to train.

Figure 0.4: Reinforcement Learning
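To give a flavour of the agent/environment/reward loop, here is a minimal sketch of tabular Q-learning, one classic RL algorithm, on a hypothetical toy environment: a corridor of 5 states where the agent starts at state 0 and receives a reward of 1 only when it reaches state 4. The environment, the discount factor `gamma`, the learning rate `alpha` and the exploration rate `epsilon` are all illustrative assumptions.

```python
import random

N_STATES = 5          # hypothetical corridor: states 0..4, state 4 is the goal
ACTIONS = (-1, +1)    # action 0 moves left, action 1 moves right

def step(state, action):
    # environment: move along the corridor, reward 1 only when reaching the goal
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]  # Q[state][action]
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            # epsilon-greedy: explore at random (or break ties), else exploit
            if rng.random() < epsilon or Q[s][0] == Q[s][1]:
                a = rng.randrange(2)
            else:
                a = 0 if Q[s][0] > Q[s][1] else 1
            s2, r, done = step(s, ACTIONS[a])
            # move Q[s][a] towards the reward plus discounted future value
            target = r if done else r + gamma * max(Q[s2])
            Q[s][a] += alpha * (target - Q[s][a])
            s = s2
    return Q

Q = q_learning()
policy = ["left" if q[0] > q[1] else "right" for q in Q[:-1]]  # greedy policy
```

The agent is never told which action is correct; it only discovers, through trial and error and a delayed reward, that moving right pays off.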

Generative Models

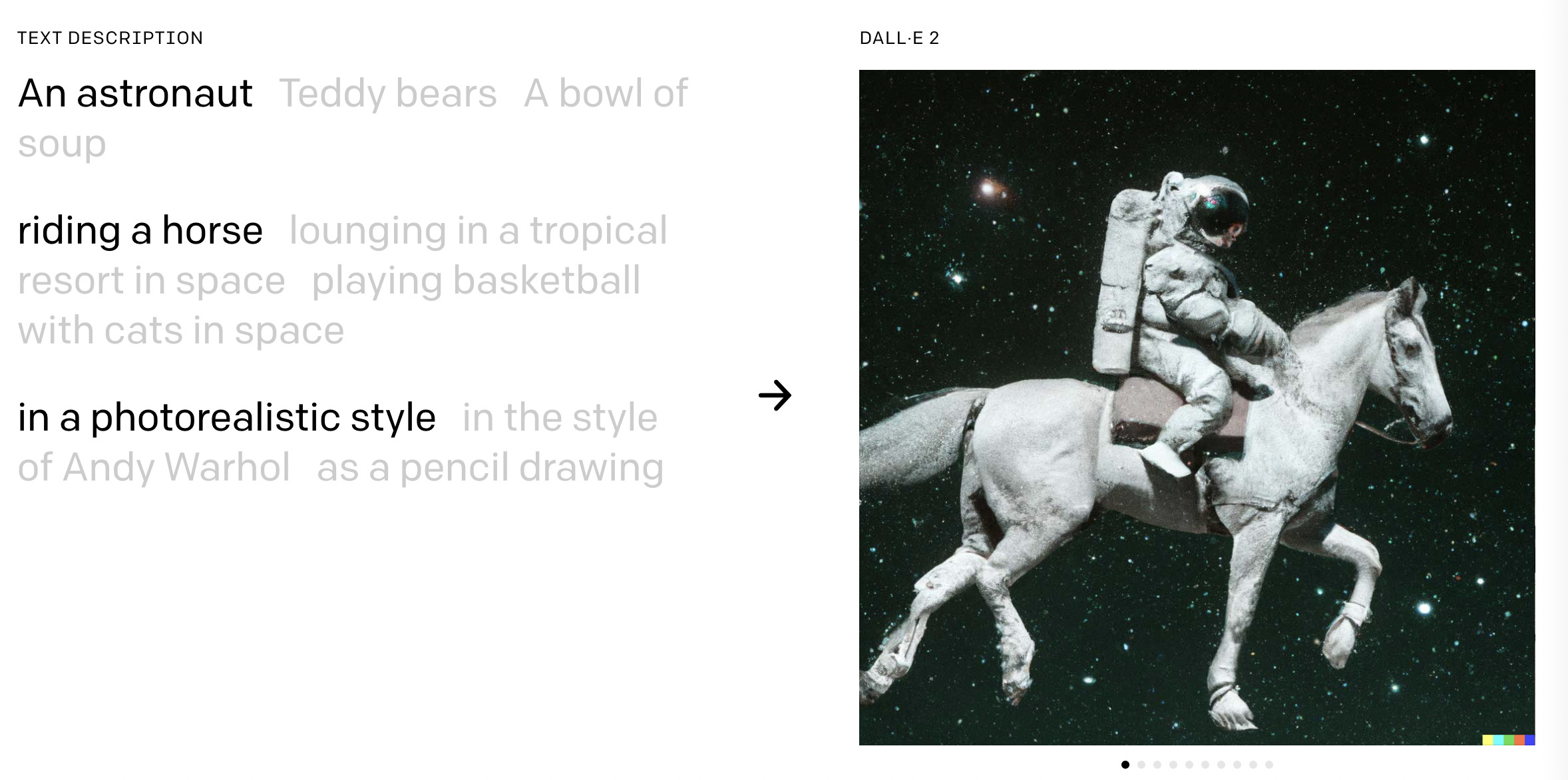

Generative modelling is a new, emerging direction of machine learning. The aim here is to generate text, images, or other media. Mathematically, we try to model the conditional probability of the observable \({\bf x}\), given a target \(y\), so that we can sample new observations \({\bf x} \sim p({\bf x}| y)\). This is the family of models behind ChatGPT, DALL·E, Stable Diffusion, etc.

Figure 0.5: Example of Generative AI with DALL·E 2
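The toy sketch below illustrates the idea of modelling \(p({\bf x}| y)\) and then sampling from it. As a deliberately simple assumption, each class \(y\) is modelled by a 1-D Gaussian whose mean and standard deviation are estimated from a small made-up dataset. Models such as ChatGPT or DALL·E use large neural networks instead, but the goal, generating new samples of \({\bf x}\) given a condition \(y\), is the same in spirit. The helpers `fit_class_conditional` and `sample` are hypothetical.

```python
import random
import statistics

def fit_class_conditional(xs, ys):
    # estimate a Gaussian p(x | y) for each class y: (mean, standard deviation)
    params = {}
    for label in set(ys):
        values = [x for x, y in zip(xs, ys) if y == label]
        params[label] = (statistics.mean(values), statistics.stdev(values))
    return params

def sample(params, y, n, seed=0):
    # generate n new observations x ~ p(x | y)
    rng = random.Random(seed)
    mu, sigma = params[y]
    return [rng.gauss(mu, sigma) for _ in range(n)]

# hypothetical 1-D dataset with two classes, y = 0 and y = 1
xs = [1.0, 1.2, 0.8, 1.1, 0.9, 5.0, 5.3, 4.7, 5.1, 4.9]
ys = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
params = fit_class_conditional(xs, ys)
new_x = sample(params, y=1, n=1000)  # new samples that resemble class 1
```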

Deep Learning has made major breakthroughs in all four fields. So much so that virtually all research papers in these fields rely on neural networks.

Early Deep Learning Successes

Image Classification

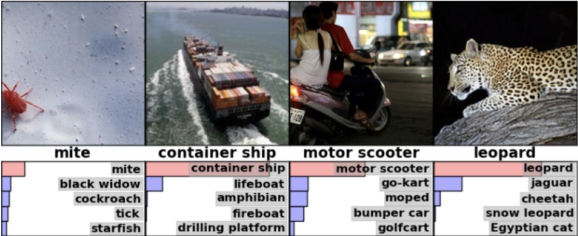

The success story of Deep Learning really starts in 2012 with Image Classification, also known as Image Recognition, which is one of the core applications of Computer Vision and arguably the birthplace of modern Deep Learning. Image recognition used to be a particularly challenging computer vision task. See for instance the 2014 comic by xkcd:

The generally adopted approach was to compute a collection of image features that people had found to be useful, and then apply the most popular classification method at the time.

ImageNet ran an annual challenge in which software programs competed to correctly classify and detect objects and scenes in images. Up to 2012, support vector machines (SVMs) were the method of choice for classification.

Figure 0.6: Image Net image classification challenge.

In 2012, it was shown (Krizhevsky, Sutskever, and Hinton 2012) that deep learning models could massively reduce the object recognition error rate. This paper caught the attention of the image recognition community and beyond. Surprisingly, the paper was not technically revolutionary: neural networks had indeed been around for a few decades without gaining much momentum. This time, however, the size of the improvement could not go unnoticed. Since then, all submissions to the competition have been based on neural networks, making incremental progress year on year. So much so that machines can now do better than humans at this specific task: in 2014, Andrej Karpathy, then a PhD student, entered the competition himself, classifying images by hand, and obtained a 5% error rate. For reference, the 2022 winning entry achieved an error rate below 1%.

Figure 0.7: Historical error Rates at ImageNet’s classification challenge between 2010 and 2015. (see full leaderboard)

Scene Understanding

These new achievements in image recognition were then brought to nearby fields such as Scene Understanding. The figure below shows instance segmentation results from Mask R-CNN (He et al. 2017): each pixel can automatically be associated with a particular class (e.g. human, train, car, etc.).

![Results from Mask R-CNN. [@maskrcnn]](figures/MaskRCNN.jpg)

Figure 0.8: Results from Mask R-CNN. (He et al. 2017)

Image Captioning

By combining image models with language models, we were able, as early as 2014, to automatically generate captions from images using a single end-to-end neural network model (see Figure below).

![Results of automated image captioning [@showandtell]. See [Google Research blog entry](https://goo.gl/U88bDQ)](figures/captioning.png)

Figure 0.9: Results of automated image captioning (Vinyals et al. 2015). See Google Research blog entry

Machine Translation

Also by 2014, the deep learning revolution had spread beyond computer vision and made its way into natural language processing.

All major tech companies rapidly switched their machine translation systems to Deep Learning. For instance, Google used to achieve an average yearly improvement of 0.4% on its machine translation system. Its first attempt at using Deep Learning yielded an overnight 7% improvement, more than the old system had gained over its entire lifetime! (see the New York Times’s “The Great AI Awakening”).

Several years of handcrafted development could not match a single initial deep learning implementation.

Note that since then, Large Language Models (LLM) have further revolutionised text processing.

These models contain hundreds of billions of parameters and have been trained to predict text on an extremely large corpus of Internet sources, made up of hundreds of billions of words, sometimes in dozens of languages.

OpenAI’s GPT-3 (Brown et al. 2020) is perhaps one of the most famous of these large language models and has been adopted in hundreds of applications, ranging from grammar correction, translation and summarisation to chatbots and text generation (see examples).

Multimedia Content

Deep Learning has quickly become the universal language for dealing with any content-driven application.

As early as 2014, Skype Translator combined speech recognition, automatic machine translation and speech synthesis, with Deep Learning acting as the glue that holds these elements together.

Game Playing

Deep learning has also been introduced in reinforcement learning to solve complex sequential decision-making problems.

Successes include playing old Atari video games, controlling real-world robots and beating humans at Go. One notable event was the victory of DeepMind’s AlphaGo over world-class Go player Lee Sedol in 2016.

Reasons of a Success

Neural Networks have been around for decades, but it is only recently that they have surpassed all other machine learning techniques. Deep Learning is now a disruptive technology that has unexpectedly been taking over the operations of technology companies around the world. And the word disruptive is not an overstatement.

“The revolution in deep nets has been very profound, it definitely surprised me, even though I was sitting right there.”

— Sergey Brin, Google co-founder

So, why now?

Because Deep Learning does scale.

Neural Nets are the only ML technique whose performance scales efficiently with the size of the training data. The other popular ML techniques used up to that point simply do not scale as well.

The advent of big databases, combined with cheaper computing power (graphics cards), meant that Deep Learning could take advantage of all this, whilst other techniques stagnated. Instead of thousands of observations, Deep Learning can take advantage of billions.

The tipping point was 2012 in Computer Vision and around 2014 in Machine Translation.

Global Reach

Deep Learning has since then been applied successfully to many fields of research, industry and society: self-driving cars, image recognition, detecting cancer, speech recognition, speech synthesis, machine translation, molecule modelling (see DeepMind’s AlphaFold project), drug discovery and toxicology, customer relationship management, recommendation systems, bioinformatics, advertising, controlling lasers, etc.

Genericity and Systematicity

One of the reasons for this success is that, by adopting an automated optimisation approach to tuning algorithms, Deep Learning is able to surpass the hand-tailored algorithms of skilled researchers.

It offers a generic, systematic approach to any problem, whereas specialised algorithms previously took years of expert human effort to design.

Simplicity and Democratisation

Deep Learning offers a relatively simple framework to define and parametrise pretty much any kind of numerical method and then optimise it over massive databases.

Programmers can train state-of-the-art neural nets without having done 10+ years of research in the domain. Modern AI toolchains (see OpenAI prompt) can even build full software solutions from a simple, plain-English description of what we want to achieve.

It is an opportunity for start-ups and it has become a ubiquitous tool in tech companies.

Impact

Here is a question for you: how long before your future job gets replaced by an algorithm? Probably much sooner than you think. You might feel safe if you are an artist…

… but then again:

![Automatic style transfer, based on [@gatys2015neural]](figures/styletransfer-2.jpeg)

Figure 0.10: Automatic style transfer, based on (Gatys, Ecker, and Bethge 2015)

… and now large neural networks such as DALL·E 2 (Ramesh et al. 2022) are capable of combining text and images to produce incredibly creative, high-quality pieces of art:

![OpenAI’s DALL·E 2’s picture creation from a text description: "Teddy bears mixing sparkling chemicals as mad scientists as a 1990s Saturday morning cartoon" (see [https://openai.com/dall-e-2/](https://openai.com/dall-e-2/))](figures/dalle2-teddybears.jpg)

Figure 0.11: OpenAI’s DALL·E 2’s picture creation from a text description: “Teddy bears mixing sparkling chemicals as mad scientists as a 1990s Saturday morning cartoon” (see https://openai.com/dall-e-2/)

A Neural Algorithm of Artistic Style. L. Gatys, A. Ecker, M. Bethge. 2015. https://arxiv.org/abs/1508.06576

Does an AI need to make love to Rembrandt’s girlfriend to make art? https://goo.gl/gi7rWE

Intelligent Machines: AI art is taking on the experts. https://goo.gl/2kfyXd

Job concerns are a real thing.

In Summary

Even if self-driving cars are not quite here yet and, no, computers have not become self-aware, Deep Learning is definitely an ongoing revolution that is having a profound impact on the way we do research and engineering, and it is making its way into all parts of society. The growing awareness of the societal and ethical concerns raised by the use of these new technologies at scale is further evidence of the significance of this revolution.

In the following chapters of these lecture notes, we will look into the essential concepts of Machine Learning (PART I), then dive into the fundamentals of Neural Networks (PART II) and recent advances (PART III).