Introduction

Deep Learning, Machine Learning, and A.I.

As you begin this module, you are witnessing a pivotal moment in a technological and societal revolution, one driven by what is now universally called Artificial Intelligence (AI). However, the path to this term has been a curious one. It started around 2012-2013, with the term Deep Learning (DL). Soon after, the broader and more established academic term Machine Learning (ML) became more common. Today, we have largely settled on AI, a catch-all term that is now globally used. Let us untangle these terms. They are not interchangeable; rather, they represent a nested hierarchy of concepts.

Artificial Intelligence is the broadest and oldest concept, born in the 1950s. The original ambition was to create machines capable of human-like intelligence in its entirety—reasoning, planning, learning, and natural language understanding. For decades, the dominant approach to AI, often called “Symbolic AI” or “Good Old-Fashioned AI” (GOFAI), relied on explicitly programming computers with hand-coded rules and logic. The machine was “intelligent” because a human had manually encoded knowledge and decision-making processes into it.

Beginning in the 1980s and gaining significant momentum through the 1990s and 2000s, a fundamentally different approach emerged: Machine Learning (ML).

At its heart, ML is about fitting mathematical models to data, a concept you’ve likely already encountered in statistics with good old regression. In regression, the goal is to find the parameters (e.g., the slope m and intercept b for a line y=mx+b) that create the best possible fit to your data. Machine Learning generalises this powerful idea. Instead of just lines, we can work with far more complex models, but the principle is the same: we use data to tune the model’s parameters automatically. Rather than explicitly programming rules, we let the machine discover the rules by learning the patterns directly from examples. This data-driven approach is a significant cultural and methodological shift.
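To make the regression example concrete, here is a minimal sketch of fitting the line y=mx+b by least squares. The data values and the function name `fit_line` are made up for illustration, and the closed-form solution used here is only one of several ways to estimate m and b.

```python
def fit_line(xs, ys):
    """Return the slope m and intercept b that minimise the sum of squared errors."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Closed-form least-squares solution for a single input variable.
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    m = cov_xy / var_x
    b = mean_y - m * mean_x
    return m, b


# Made-up observations that lie exactly on the line y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

m, b = fit_line(xs, ys)
print(f"slope m = {m}, intercept b = {b}")
```

Nothing in `fit_line` encodes the rule "multiply by 2 and add 1"; the parameters are recovered from the examples alone, which is the principle that ML generalises to more complex models.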

While we often think of “machine learning” as a term from computer science, the underlying principles and techniques have been developed across many disciplines. For anyone working with numerical data, the need for analytical tools is universal. Many fields, including statistics and signal processing, have contributed to and benefited from the development of these methods. This interdisciplinary nature has led to some political friction. For instance, many statisticians might view much of modern ML as “applied statistics” or refer to it as Statistical Learning, emphasising its deep roots in their field.

This brings us to the focus of this module: Deep Learning (DL). Deep Learning is a specific subfield of Machine Learning. It is not a new idea—its core concepts have existed for decades, with scientists such as McCulloch and Pitts (1943), Rosenblatt (1958) and Joseph (1960) introducing the ideas of artificial neurons, perceptrons, and multilayer perceptrons. But it remained an essentially fringe domain for decades and it is only in the 2010s that it became a practical and dominant method.

The defining feature of Deep Learning is its use of deep Artificial Neural Networks—architectures with multiple layers of interconnected nodes, loosely inspired by the structure of the human brain.

The relationship between these fields can be summarised as:

\text{Deep Learning} \subset \text{Machine Learning} \subset \text{AI}

Note that while deep learning was technically always a part of the broader AI research field, it was a fringe area, and its major breakthrough papers happened first in the fields of computer vision, image processing, audio processing and natural language processing, which were external to AI. Their success was so profound that it revitalised the term AI, giving it a new meaning. So much so that whenever you hear about AI today, whether in self-driving cars, medical diagnostics, or natural language translation, you can be almost certain that it is, in fact, powered by Deep Learning.

Main Areas of Machine Learning

Machine learning itself can be broadly categorised into four main areas, each addressing different types of problems: Supervised Learning, Unsupervised Learning, Reinforcement Learning, and Generative Models. Deep Learning has had a major impact on all of them.

Supervised Learning

Supervised learning is the most common type of machine learning, accounting for a vast majority of research and applications. In supervised learning, we start with a dataset that has been labelled with the correct outcomes. For example, we might have a collection of images, where each image is labelled as either a “dog” or a “cat.” The goal is to train a model that can learn the relationship between the input data (the images) and the corresponding labels.

Mathematically, we have a dataset of n observations, where each observation consists of a feature vector {\bf x}_i (e.g., the pixels of an image) and a known outcome y_i (e.g., 0 for a dog, 1 for a cat). The task is to learn a function f from that labelled dataset ({\bf x}_i, y_i)_{i=1,\dots,n} that can predict the outcome for a new, unseen input: f({\bf x}_j, {\bf w})=y_j. This is achieved by estimating the parameters {\bf w} of the model f({\bf x}, {\bf w}).

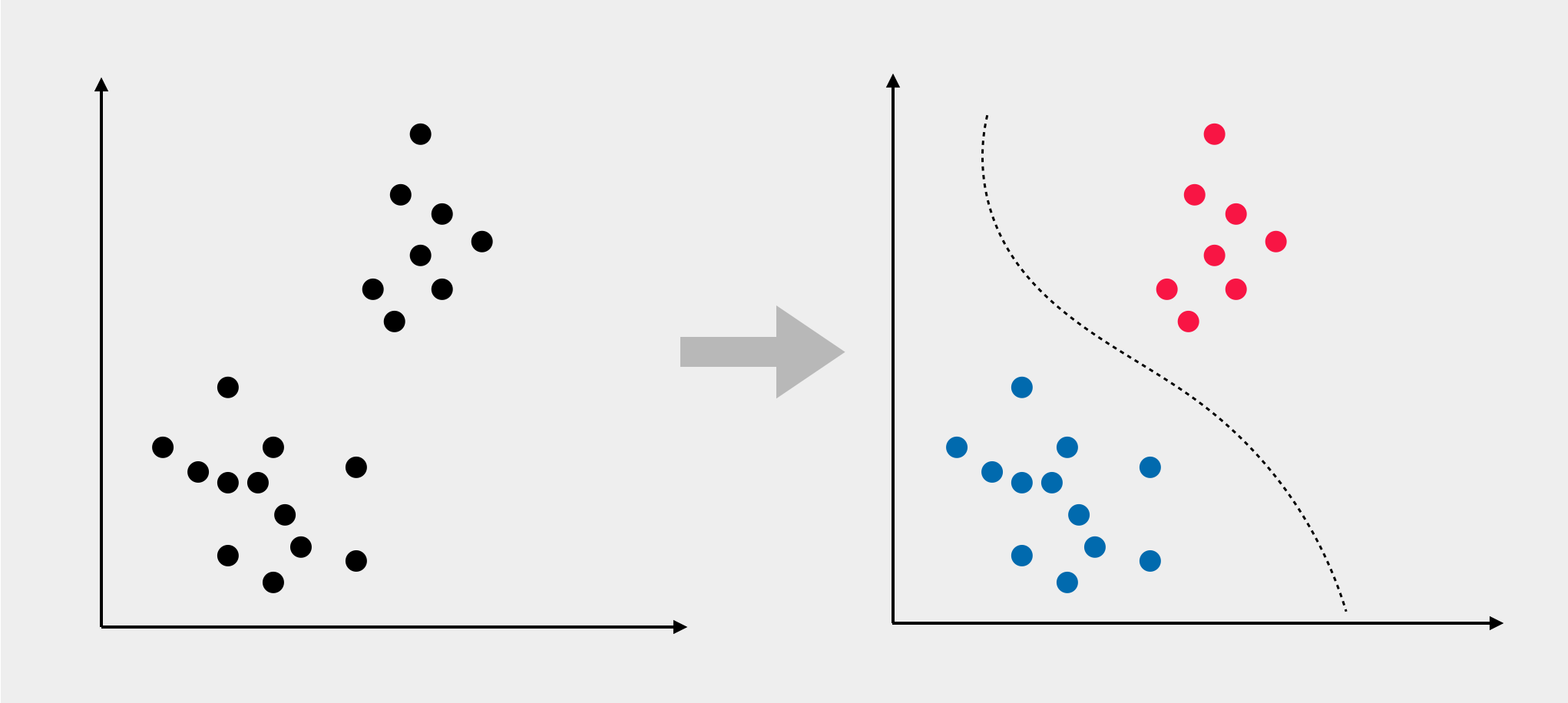
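The supervised setting described above can be sketched with a very small model. The example below assumes a logistic regression model for f({\bf x}, {\bf w}), trained by plain gradient descent on six made-up 2D points; the data, the learning rate, the number of steps, and the function names are all illustrative choices, not something prescribed by this module.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(x, w, b):
    """Model f(x, w): estimated probability that x belongs to class 1."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def predict(x, w, b):
    return 1 if predict_proba(x, w, b) > 0.5 else 0


def train(X, y, lr=0.1, steps=5000):
    """Estimate the parameters (w, b) by gradient descent on the mean log-loss."""
    w = [0.0] * len(X[0])
    b = 0.0
    n = len(X)
    for _ in range(steps):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for xi, yi in zip(X, y):
            err = predict_proba(xi, w, b) - yi  # gradient of the log-loss w.r.t. the logit
            for j, xij in enumerate(xi):
                grad_w[j] += err * xij / n
            grad_b += err / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Made-up labelled dataset: feature vectors x_i with outcomes y_i (0 or 1).
X = [(0.0, 0.0), (0.5, 1.0), (1.0, 0.5), (3.0, 3.0), (3.5, 2.5), (2.5, 3.5)]
y = [0, 0, 0, 1, 1, 1]

w, b = train(X, y)
train_predictions = [predict(xi, w, b) for xi in X]
print(train_predictions)
print(predict((0.2, 0.3), w, b), predict((3.2, 2.9), w, b))  # new, unseen inputs
```

The last line applies the learned function to inputs that were not in the training set, which is the actual purpose of supervised learning.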

Unsupervised Learning

In unsupervised learning, the goal is to find patterns and structure in a dataset ({\bf x}_i) without the help of any pre-existing labels. A common application is clustering, where the algorithm groups similar data points together. For example, an e-commerce website could use clustering to segment its customers into different groups based on their purchasing behaviour. These clusters can then be used for targeted marketing campaigns.

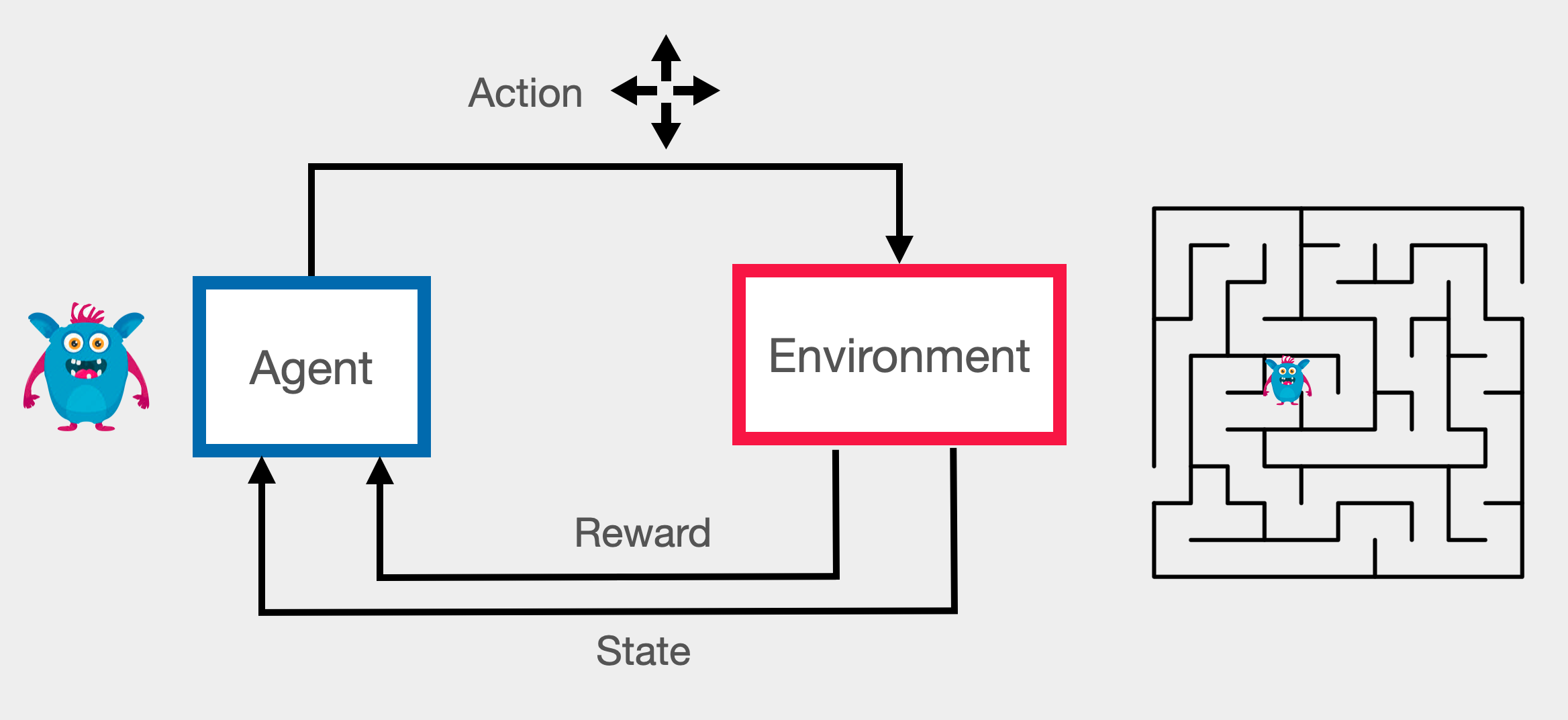
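As an illustration of clustering, here is a minimal sketch of the k-means algorithm, one common clustering method (the text above does not single out any particular algorithm). The customer data, the two features, and the initial centroids are made up for the example.

```python
def squared_distance(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))


def k_means(points, initial_centroids, iterations=10):
    """Alternate between assigning points to the nearest centroid and updating centroids."""
    centroids = list(initial_centroids)
    labels = []
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        labels = [
            min(range(len(centroids)), key=lambda k: squared_distance(p, centroids[k]))
            for p in points
        ]
        # Update step: each centroid moves to the mean of its members.
        for k in range(len(centroids)):
            members = [p for p, label in zip(points, labels) if label == k]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[k] = tuple(sum(c) / len(members) for c in zip(*members))
    return labels, centroids


# Made-up customers: (orders per month, average basket value in tens of euros).
customers = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]

labels, centroids = k_means(customers, initial_centroids=[(1.0, 1.0), (8.0, 8.0)])
print(labels)
print(centroids)
```

No labels are given to the algorithm; the two customer segments emerge only from the distances between the data points.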

Reinforcement Learning

Reinforcement learning (RL) is about training an agent to make a sequence of decisions in an environment to maximise a cumulative reward. The agent learns through trial and error, receiving feedback in the form of rewards or penalties for its actions. RL is the basis for training models to play games like chess and Go, as well as for robotics applications where a robot learns to navigate its surroundings. While powerful, RL can be complex and data-intensive to implement, which is why it is less common than supervised or unsupervised learning.
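The trial-and-error loop can be sketched with tabular Q-learning, one classic RL algorithm, on a toy problem. Everything here is an assumption made for illustration: the environment is a hypothetical chain of five positions with a reward only at the right-hand end, and the agent explores by acting completely at random.

```python
import random

N_STATES = 5        # positions 0..4; position 4 is the goal
ACTIONS = (-1, +1)  # move left or move right
GAMMA = 0.9         # discount factor for future rewards
ALPHA = 0.5         # learning rate


def step(state, action):
    """Hypothetical environment: move along the chain, reward 1 on reaching the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def q_learning(episodes=500, max_steps=100, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action index]
    for _episode in range(episodes):
        state = 0
        for _t in range(max_steps):
            a = rng.randrange(len(ACTIONS))  # trial and error: act at random
            next_state, reward, done = step(state, ACTIONS[a])
            # Move the estimate towards reward + discounted best future value.
            target = reward if done else reward + GAMMA * max(q[next_state])
            q[state][a] += ALPHA * (target - q[state][a])
            state = next_state
            if done:
                break
    return q


q = q_learning()
# Greedy policy for the non-terminal states: pick the action with the highest value.
policy = [ACTIONS[row.index(max(row))] for row in q[:-1]]
print(policy)
```

The agent is never told that moving right is the correct behaviour; it only receives a reward at the goal, and the learned values propagate that information back to the earlier states.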

Generative Models

Generative models are a rapidly advancing area of machine learning focused on creating new content. These models learn the underlying distribution of a dataset and can then generate new samples that are similar to the original data. This includes generating realistic images, writing human-like text, and composing music.

Mathematically, we try to model the conditional probability of the observable {\bf x}, given a target y: {\bf x} \sim p({\bf x}| y). This is your ChatGPT, Midjourney, Stable Diffusion, etc.
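To illustrate what modelling and sampling from p({\bf x}| y) means, here is a deliberately tiny sketch. It assumes a one-dimensional x and a Gaussian distribution per class, fitted from made-up data; real generative models replace this Gaussian with a deep neural network, but the two steps (learn the distribution, then sample from it) are the same.

```python
import random
import statistics


def fit_class_gaussians(xs, ys):
    """Estimate a mean and standard deviation of x for each label y."""
    params = {}
    for label in set(ys):
        values = [x for x, y in zip(xs, ys) if y == label]
        params[label] = (statistics.mean(values), statistics.pstdev(values))
    return params


def sample(params, label, n, seed=0):
    """Draw n new values of x from the fitted model p(x | y = label)."""
    rng = random.Random(seed)
    mu, sigma = params[label]
    return [rng.gauss(mu, sigma) for _ in range(n)]


# Made-up dataset: animal weights in kg (x) with their labels (y).
xs = [4.0, 5.0, 6.0, 20.0, 25.0, 30.0]
ys = ["cat", "cat", "cat", "dog", "dog", "dog"]

params = fit_class_gaussians(xs, ys)
new_dogs = sample(params, "dog", n=1000)
print(params["dog"])
print(new_dogs[:3])
```

The generated values are not copies of the training data; they are new samples that follow the same distribution as the examples labelled "dog".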

Deep Learning has made major breakthroughs in all four of these fields. As a result, neural networks have become the dominant tool in virtually all areas of machine learning research.

Early Deep Learning Successes

Image Classification

The story of Deep Learning’s success began in 2012 with Image Classification, also known as Image Recognition. This core task in Computer Vision is arguably the birthplace of modern Deep Learning. For years, image recognition was a notoriously difficult problem. The prevailing approach involved manually engineering a set of image features and then feeding them into a classification algorithm. The 2014 comic from xkcd illustrates this challenge:

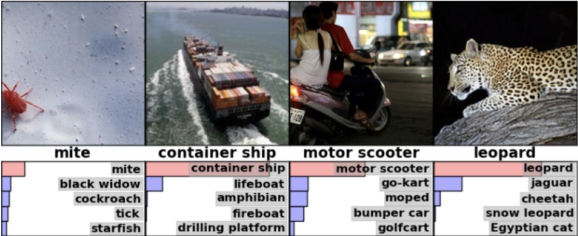

The ImageNet Large Scale Visual Recognition Challenge (ILSVRC) is an annual competition which benchmarks the performance of image recognition algorithms. Before 2012, methods like Support Vector Machines (SVMs) were the top performers.

In 2012, a deep learning model called AlexNet (Krizhevsky, Sutskever, and Hinton 2012) dramatically reduced the error rate for object recognition, capturing the attention of the computer vision community and beyond. While neural networks had existed for decades, the scale of this improvement was undeniable. Since then, every winning entry in the ImageNet competition has been based on a deep neural network, with each year bringing further incremental progress. Today, machines have surpassed human performance on this specific task. In 2014, Andrej Karpathy, then a PhD student, manually classified a subset of the ImageNet dataset and achieved a 5% error rate. For comparison, the winning entry in 2022 had an error rate of less than 1%.

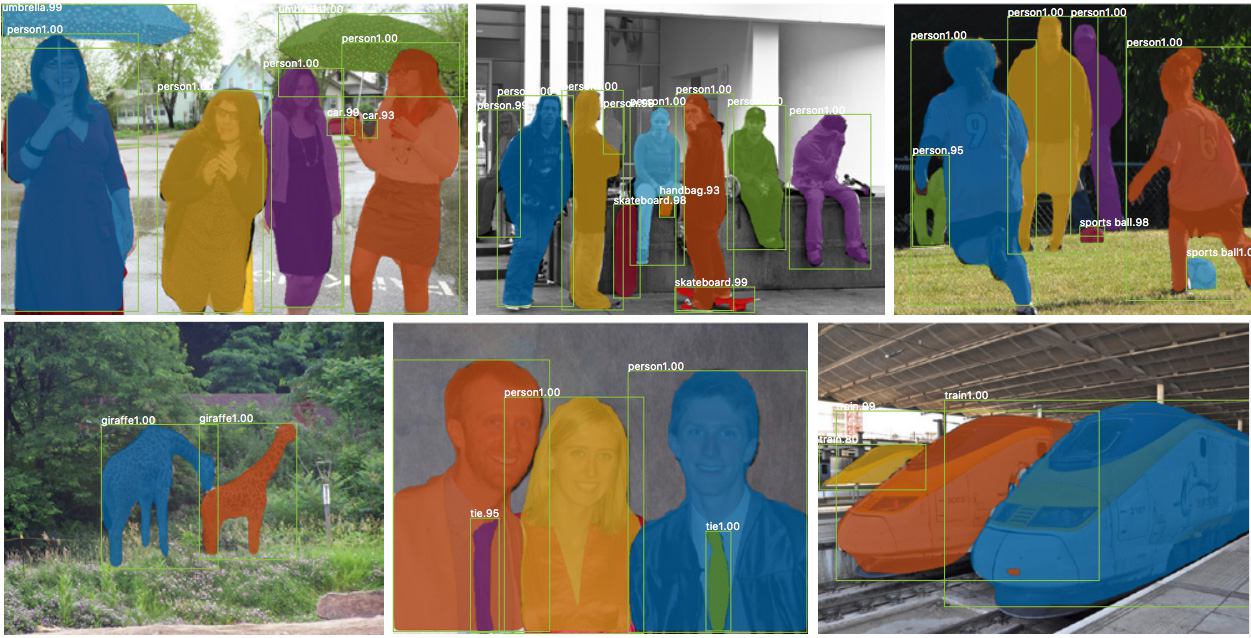

Scene Understanding

The advancements in image recognition quickly spread to related fields like Scene Understanding. The figure below shows the results of Mask R-CNN (He et al. 2017), a deep learning model that performs instance segmentation. This means it can detect each object in an image and label the pixels that belong to it, associating them with a specific object class like “human,” “train,” or “car.”

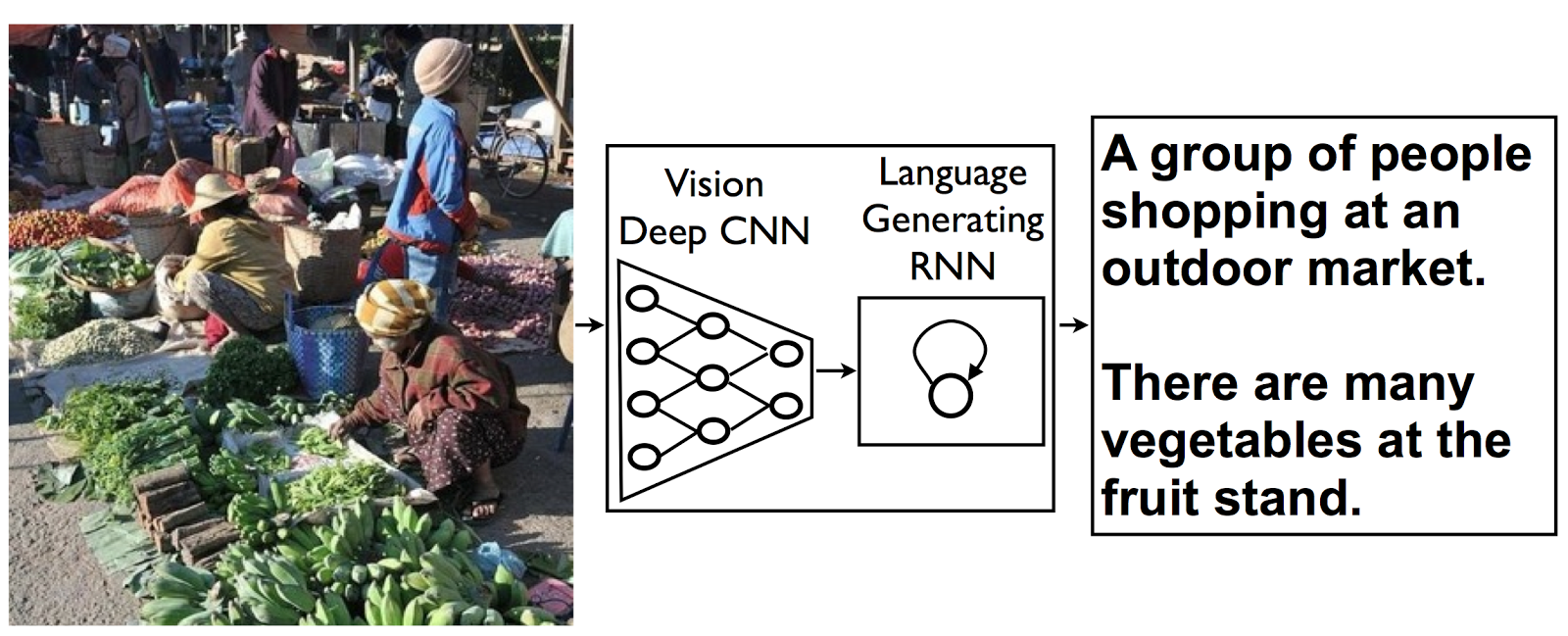

Image Captioning

By 2014, researchers were combining deep learning models for vision and language to automatically generate captions for images. A single, end-to-end neural network could now take an image as input and produce a descriptive sentence as output.

Machine Translation

The deep learning revolution also transformed the field of Natural Language Processing (NLP). By 2014, major tech companies were replacing their existing machine translation systems with deep learning models. Google, for example, had been seeing an average annual improvement of 0.4% on its translation service. Their first implementation of a deep learning-based system resulted in a 7% improvement overnight—more than the cumulative progress of a decade of work. This story is detailed in the New York Times article, “The Great AI Awakening”.

Years of handcrafted feature engineering were rendered obsolete overnight by a simple deep learning model.

Since then, the development of Large Language Models (LLMs) has further revolutionised text processing. These models, with hundreds of billions of parameters, are trained on vast amounts of text from the internet, often in multiple languages.

With the release of OpenAI’s GPT-3 in June of 2020, the revolution went mainstream. GPT-3 became a household name and brought the capabilities of LLMs to a global audience.

Multimedia Content

Deep Learning has become a universal tool for applications that involve multiple types of media. As early as 2014, Microsoft showcased how speech recognition, machine translation, and speech synthesis could be combined into a single, seamless experience.

Game Playing

Deep learning has also been successfully applied to reinforcement learning, enabling the solution of complex sequential decision-making problems. This has led to remarkable achievements, such as training agents to play Atari games, controlling real-world robots, and defeating human champions at the game of Go. In March 2016, the victory of DeepMind’s AlphaGo over one of the world’s top Go players, Lee Sedol, was a landmark event in the history of A.I.

Reasons for Success

Neural networks have been around for decades, but it was only around 2013 that they started to surpass all other machine learning techniques. Deep learning has become a disruptive technology that has fundamentally changed the operations of technology companies worldwide. This is not an overstatement.

“The revolution in deep nets has been very profound, it definitely surprised me, even though I was sitting right there.”

— Sergey Brin, Google co-founder

So, why then?

The key reason is that Deep Learning scales.

Neural networks are unique in their ability to keep improving their performance as the amount of data increases. As illustrated in the now-classic diagram of Figure 10, other machine learning techniques that were popular before do not scale as effectively.

The availability of massive datasets and the development of powerful, low-cost computing hardware (especially Graphics Processing Units, or GPUs) created the perfect conditions for deep learning to flourish. While other methods plateaued, deep learning models could continue to improve by training on billions of examples instead of just thousands.

The tipping point for computer vision was in 2012, and for machine translation, it was around 2014.

Global Reach

Since these early successes, deep learning has been successfully applied to a wide range of fields in research, industry, and society. Some examples include: self-driving cars, medical image analysis for cancer detection, speech recognition and synthesis, drug discovery and toxicology (see DeepMind’s AlphaFold project), customer relationship management, recommendation systems, bioinformatics, advertising, and even controlling lasers.

Genericity and Systematicity

One of the most significant advantages of deep learning is its capacity to automatically learn features directly from data. This capability often allows it to surpass the performance of traditional approaches that require extensive time and expert knowledge to create useful features from the data. In the early days of deep learning, it was common for even a simple master’s project to beat complex, state-of-the-art algorithms from teams of world experts on its first attempt. This made deep learning a powerful and generalisable approach for solving problems across a wide range of domains.

Simplicity and Democratisation

Deep learning provides a relatively simple and flexible framework for defining and optimising a wide range of models. With modern deep learning libraries, programmers can train state-of-the-art neural networks without needing a decade of research experience in the field. Furthermore, modern AI toolchains allow developers to build sophisticated software solutions using simple natural language prompts. In fact, coders might not be needed anymore: all you need to do is write some text. This has created new opportunities for startups and has made A.I. a ubiquitous tool in the industry.

Impact

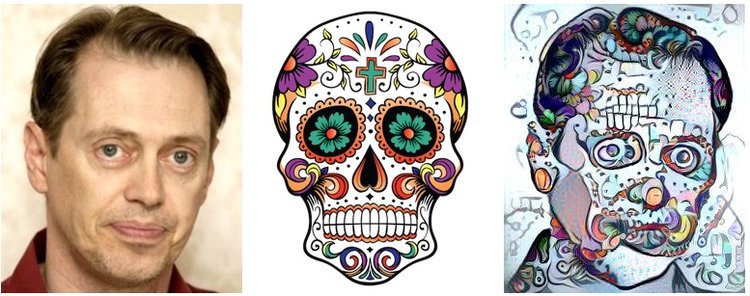

The rapid progress of A.I. raises important questions about the future of work. How long will it be before your job can be automated by an algorithm? Even creative professions are no longer immune.

For example, early deep learning models (2015) could already perform style transfer, applying the artistic style of one image to another:

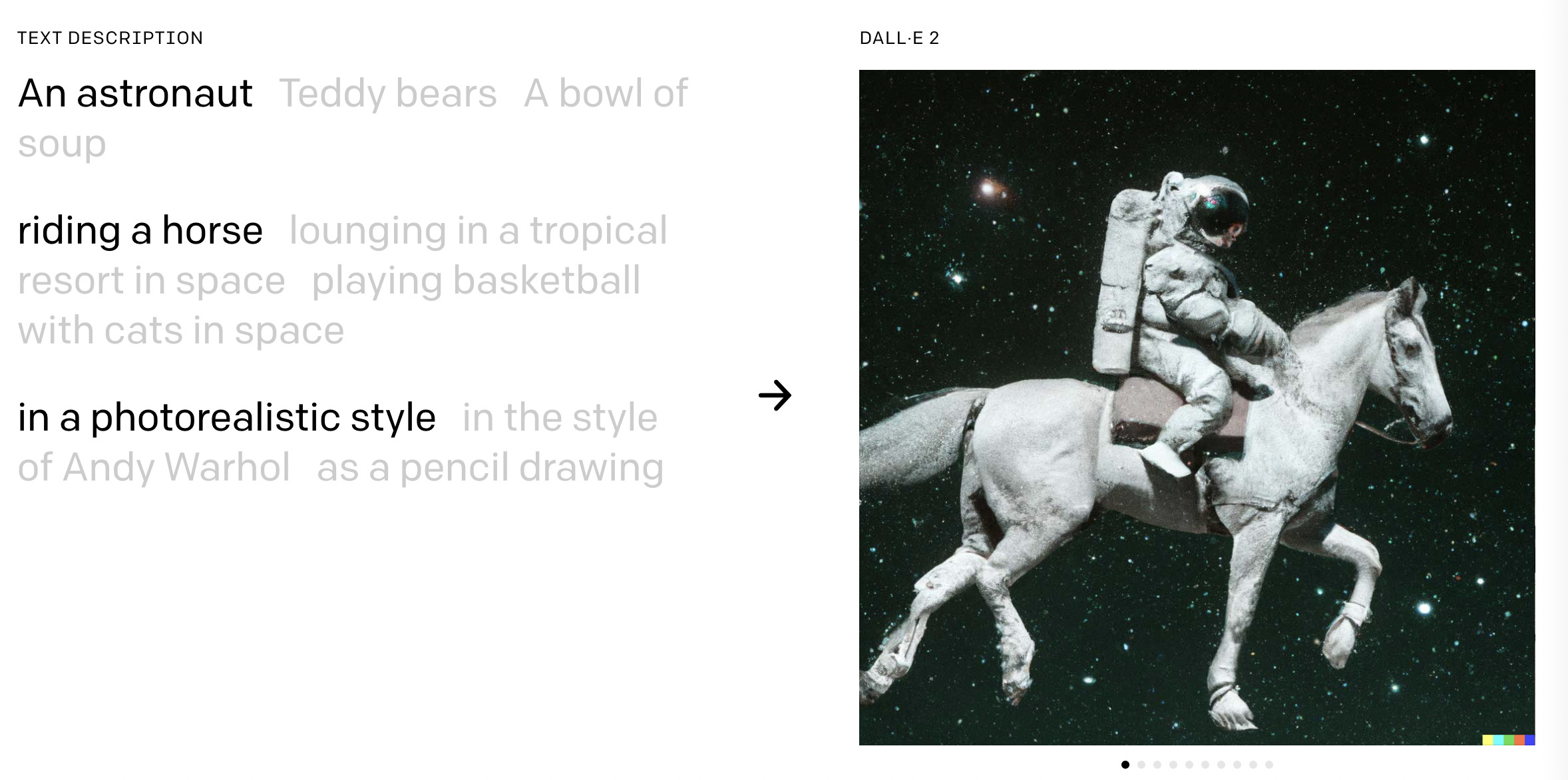

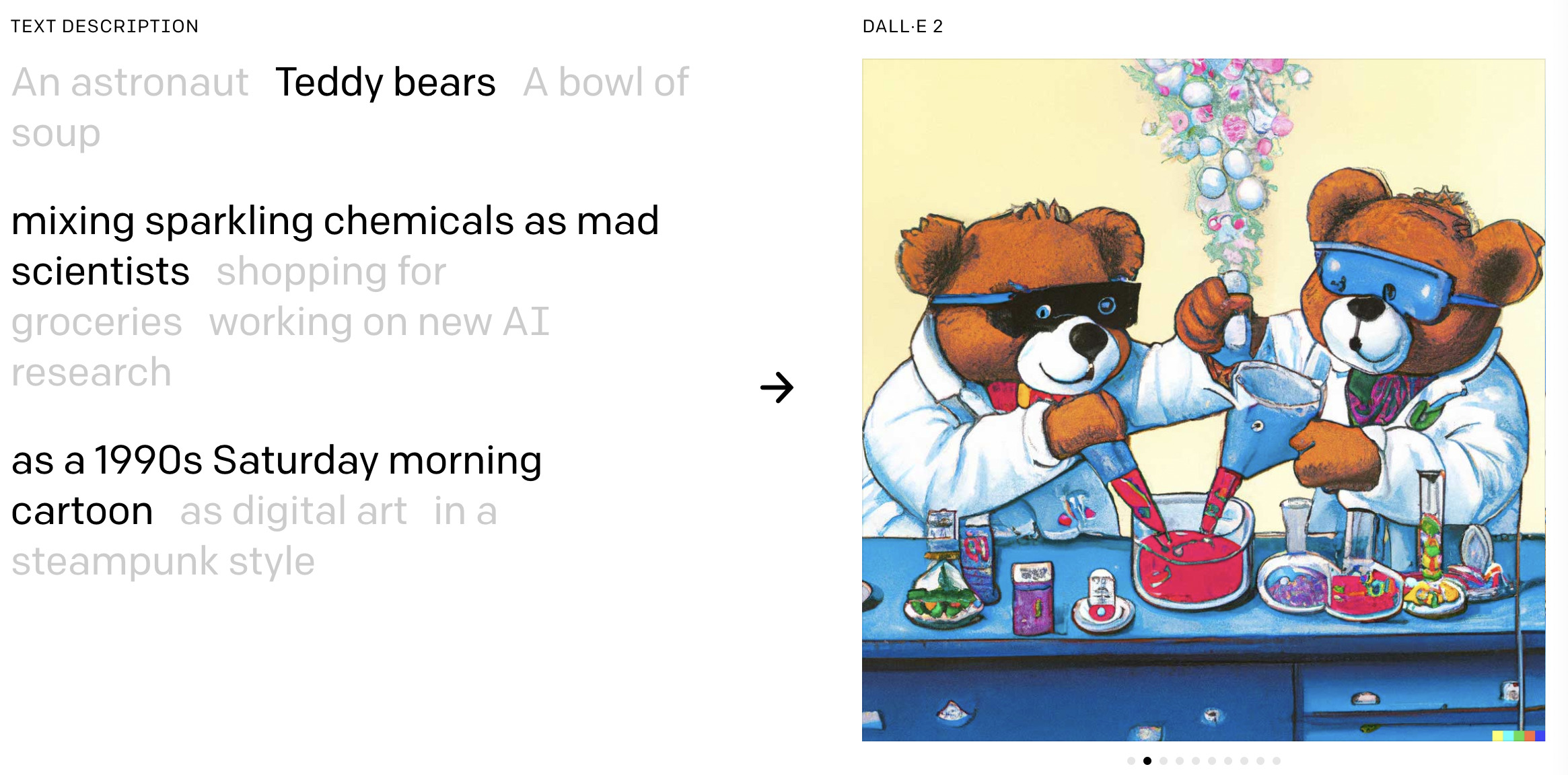

Now large-scale models like DALL·E 2 (Ramesh et al. 2022) can generate incredibly creative and high-quality images from text descriptions.

Concerns about job displacement are serious, and time will tell how the disruption will truly impact our society.

In Summary

While fully autonomous cars are not quite yet a reality and computers have not achieved consciousness, the deep learning revolution is well underway. It is profoundly changing how we approach research and engineering, and its impact is being felt across all sectors of society. The growing awareness of the societal and ethical implications of these technologies is a testament to the significance of this transformation.

In the following chapters, we will explore the essential concepts of machine learning (Part I), delve into the fundamentals of neural networks (Part II), cover more recent advances in the field (Part III), and finish with an introduction to generative models (Part IV).